Evaluation isn’t just for technical specialists. It can be a shared, creative, and powerful process that strengthens organisations from the inside out: helping teams learn, improve, and make better decisions.

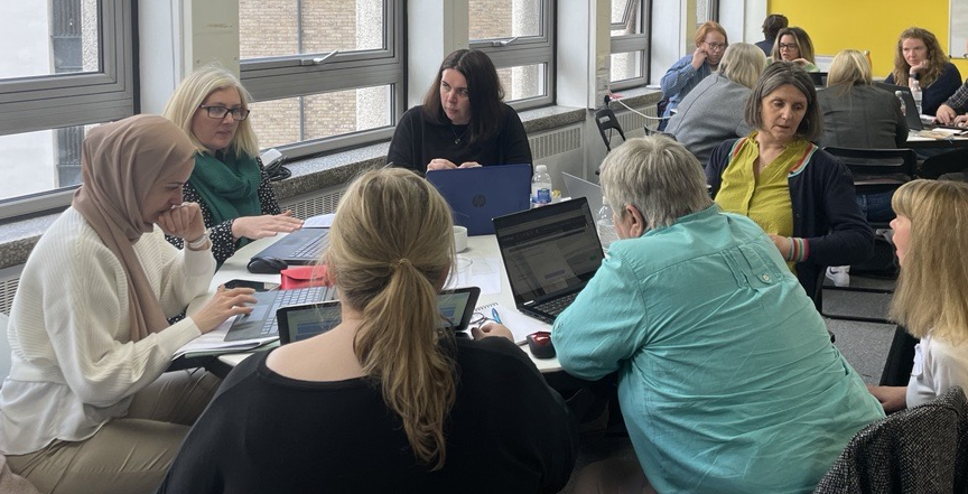

We often support organisations to host collective analysis sessions – bringing non-evaluators into the process of making sense of data and tracking progress.

Here are our practical tips for running your own sessions:

1. Be clear about the purpose and expectations

Before people enter the room or online space, be clear:

- Why are we gathering?

- What do we want to achieve?

- What is expected of each person?

Setting a clear purpose – like “analyse findings to shape our future actions” – helps people focus, adopt a more analytical mindset and feel they have an active role to play in making sense of the data.

You can also share a few examples of what you might do in the session (e.g. reviewing case studies, identifying themes, or checking how well your outcomes are holding up).

2. Demystify data and analysis

For many people, words like “data” and “analysis” can feel intimidating or remote. Start by explaining what you mean by data (for example, “the notes from your conversations” or “the number of calls logged”) and what analysis looks like in practice (“spotting patterns” or “asking what’s missing”).

Using plain language makes the process more inclusive and reduces the risk that people will defer to the “experts” rather than contributing their valuable insights.

3. Create a safe and inclusive space

People will only share openly if they feel safe and welcome to do so. This means:

- Valuing all views – including subjective experiences.

- Welcoming lived experience and frontline insights as legitimate sources of knowledge.

- Structuring discussions to make space for quieter voices.

Pay attention to group composition. Sometimes, separate sessions for staff, people who use services, or leaders make it easier for people to speak freely.

The Scottish Care team come together for collective analysis

4. Acknowledge and address bias

Bias – especially unconscious bias – is always present when we analyse data. Rather than ignoring it, create space to ask:

- How might our organisational position, lived experience, or assumptions shape what we notice (or ignore)?

- What steps can we take to minimise bias during analysis?

Prompts like “What tells you this is true?” can help people reflect on their interpretations without feeling judged.

Bias is a risk – but it can also offer useful insights if handled thoughtfully.

5. Keep it practical and hands-on

People engage more deeply when they are doing, not just talking.

Simple, tactile activities like highlighting key points, clustering ideas, or using online collaborative tools create energy and focus.

If you’re online, visual tools (like shared whiteboards, sticky notes, or live annotation) help keep things tangible, focused and interactive.

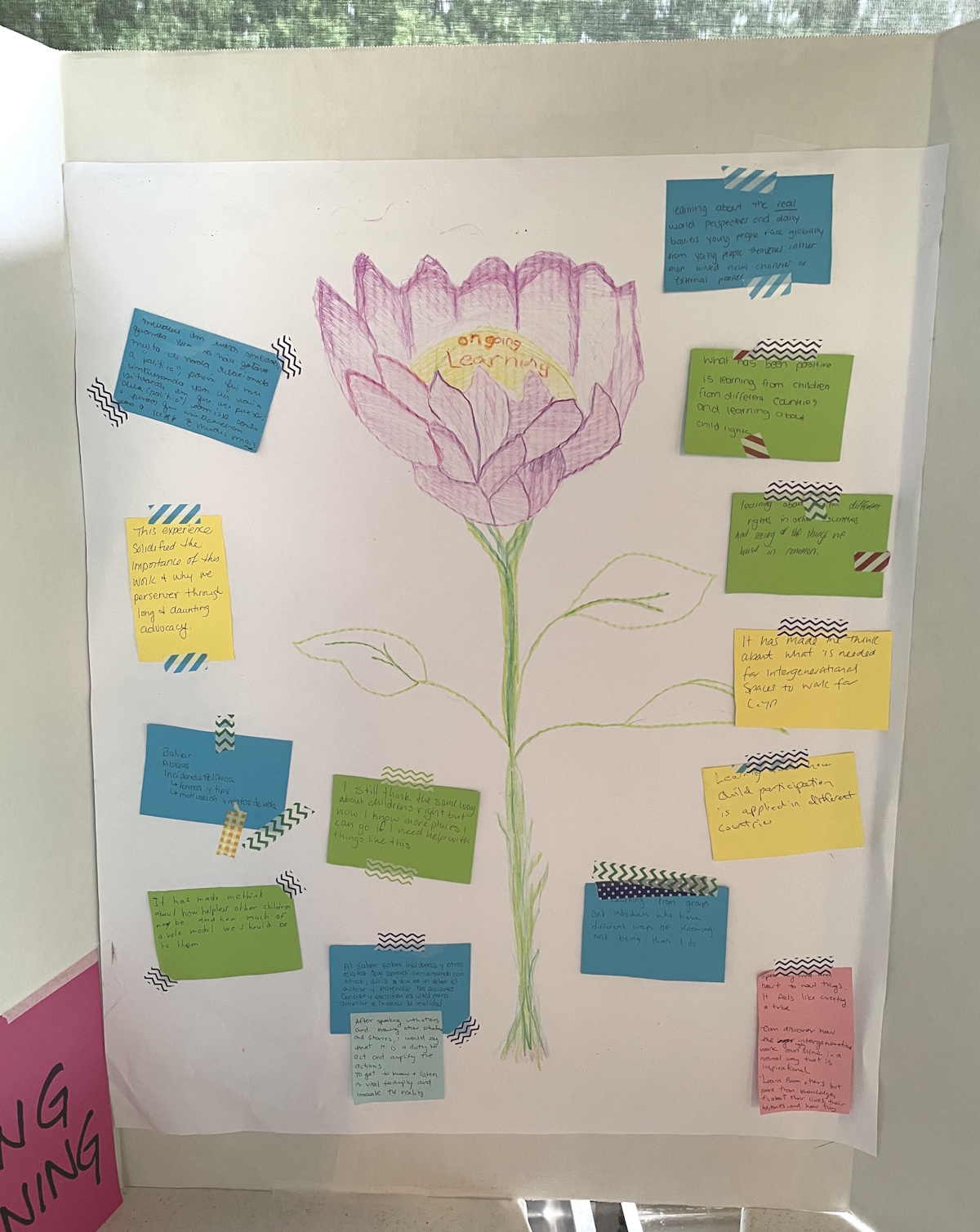

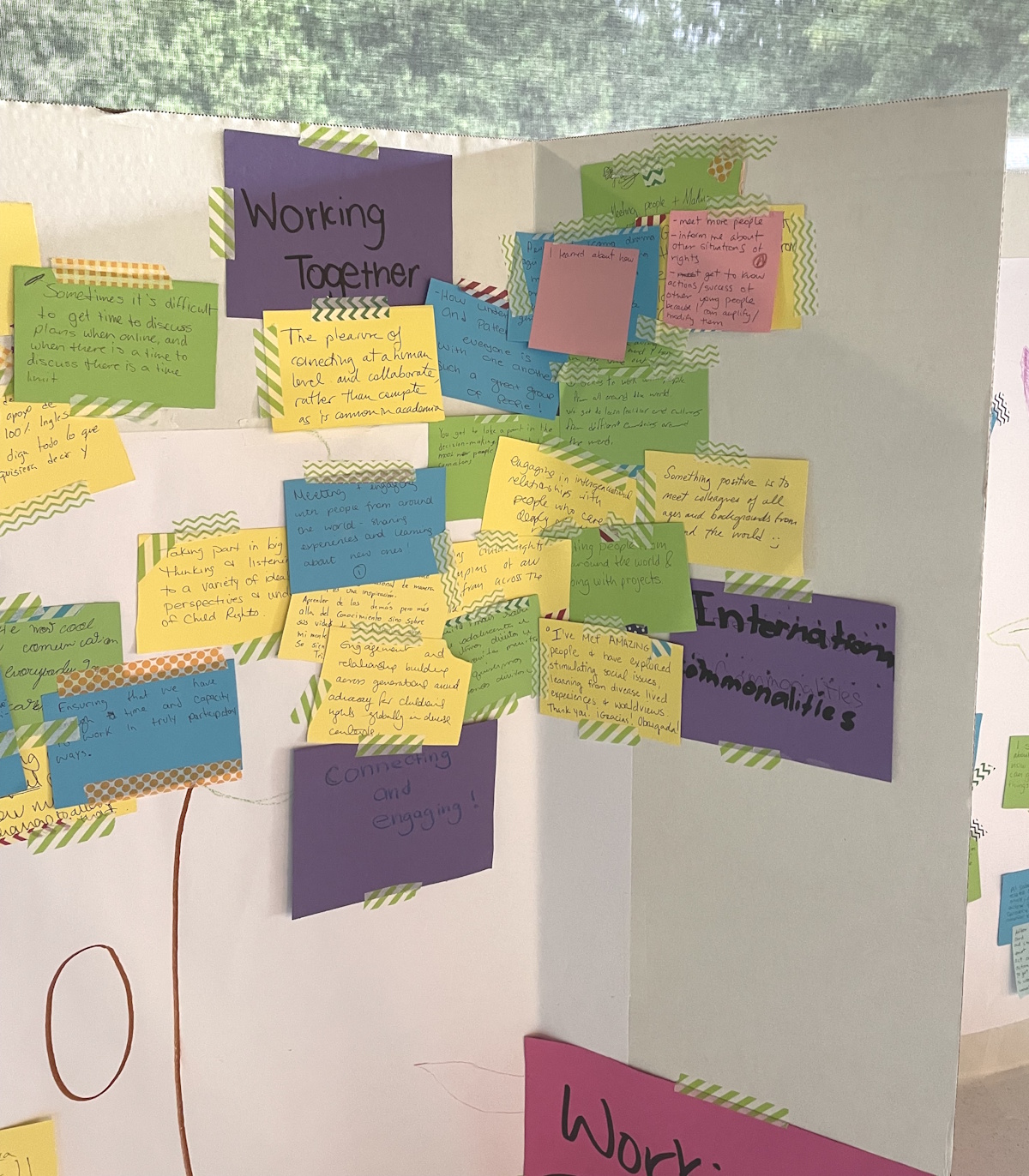

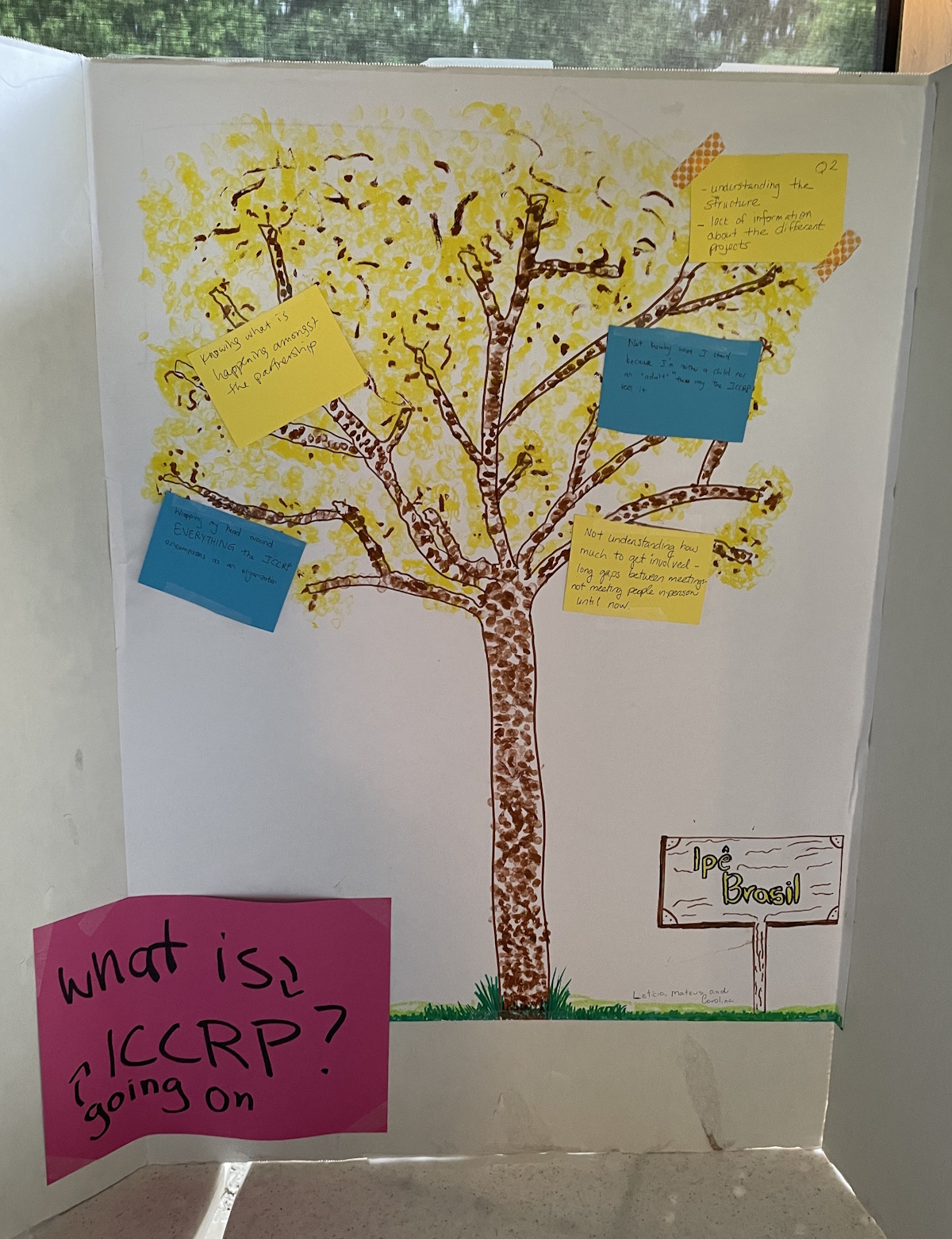

Outputs from a data analysis workshop with young people at the International and Canadian Child Rights Partnership

6. Think carefully about who’s in the room

Different perspectives make analysis richer – but choosing who takes part needs thought.

- Avoid inviting senior leaders (like CEOs) if their presence might make others hold back.

- Mix roles thoughtfully to balance operational insights with lived experience.

- Consider whether some groups (e.g., people with lived experience) might need their own space first before bringing everyone together.

Who you involve – and how you involve them – affects the depth and honesty of the analysis.

7. Agree standards and processes upfront

To avoid confusion and conflict later, agree together:

- How will we judge progress?

- What counts as good enough evidence?

- What standards will we apply?

Being clear about success criteria and decision-making processes early on supports rigour and transparency.

Document decisions clearly – not just the conclusions, but how you got there.

If you use OutNav, you can structure evidence standards and success criteria across your outcome map, which helps to keep analysis consistent and focused.

8. Ask good questions

Structure your session with simple but useful prompts like:

- What surprises you about this data?

- What patterns or themes do you notice?

- What’s being said – or not said?

- How do you feel about these findings?

- What tells you this is true?

These kinds of questions help people dig deeper and think more critically.

9. Support ownership, not dependency

When people help make sense of data, they connect with it more. They remember it. They see the stories behind the numbers.

Collective analysis shifts the culture from “data for the evaluation team” to “data as something we all use and shape”.

You’ll need to find the balance between supporting people and doing the technical work yourself. It’s a constant tension – but the more ownership you can foster, the stronger the culture of learning becomes.

10. Build shared understanding from the start

If people collectively agree success criteria and standards early on (e.g., when designing an evaluation map or setting up reporting templates), it makes later analysis smoother and more meaningful.

Shared framing at the start avoids surprises later and strengthens consistency across teams.

11. Rethink who collects data and how often

Not all data needs to be collected all the time.

Step back and ask:

- How often do we really need to collect this data?

- Who is best placed to collect it?

- What do we need to show progress?

This helps avoid “data treadmill” fatigue and keeps evaluation focused and meaningful.

12. Celebrate success and learning

Use collective analysis not just to find gaps or shortcomings, but also to celebrate progress.

Highlighting where things are working well can build motivation and reinforce a positive evaluation culture.

Final thoughts

Collective analysis sessions aren’t just about working through data. They are opportunities to strengthen relationships, deepen organisational learning, and build a shared sense of purpose.

With the right questions and a safe place for open dialogue, everyone can take part, understand their impact better – and help drive it.