Our early adopters were outcome evaluation pioneers who helped us shape our approach and tested the first version of our software OutNav. Their feedback has been essential in helping us develop a great product and service.

We worked with the Thistle Foundation, who were ambitious in mapping all of their organisation’s activities to outcomes. This helped them to think through what data, feedback and evidence they needed to support their learning organisation culture. They are using OutNav to monitor their training programme, and to understand how they influence the wider context in promoting personal outcomes as a way of working.

We are a learning partner for Health Technology Wales, a new organisation supporting the NHS in Wales. We helped them map their key strategies to the outcomes they and their funders care about. We are working together to understand the impact of their work, using OutNav to track their communications strategy and their evidence appraisal work.

Future Pathways offers help and support to people who were abused or neglected as children while living in care in Scotland and is taking a personal outcomes approach. They knew that demonstrating effectiveness was essential to the people who use their services, and to funders and collaborators. We have been working alongside them to understand and use outcomes to drive change, and to use OutNav to track their progress.

Starcatchers is Scotland’s national arts and early years organisation with multiple programmes and funders asking for information on different outcomes. We have been working with them to map different streams of work to outcomes, to help them embed outcome evaluation in their day-to-day work, and to evaluate an innovative programme of work with kinship carers and their children.

We supported Healthcare Improvement Scotland to streamline their approach to understanding outcomes, and the Focus on Dementia team are using OutNav to help monitor the success of their improvement programme with staff and carers.

We worked with a diverse team from Edinburgh Health and Social Care Partnership committed to finding a more meaningful way to report on the difference that services are making to people. They are using OutNav to evaluate the difference that their House of Care programme is making, as well as using the software to report on progress for the Edinburgh Long Term Conditions Service.

It was always a priority for us to establish a strong community of users around our approach and we’re really pleased with how this is growing and developing. We’re hugely grateful to everyone that has shared their learning with the Community – nothing beats the chance to learn from others who have been there before!

The Citadel Youth Centre is a community-based youth service in Leith, Edinburgh, that has been working for decades with disadvantaged young people and their families.

Matter of Focus has been working with Citadel to help evaluate their Big Lottery funded Families Project.

Workers have collected data from children and parents about what they think of the service and what they have learned and gained, but Citadel staff asked Matter of Focus to approach stakeholders as they felt a neutral outsider would receive more honest opinions.

It was agreed that we would devise and run a stakeholder survey online, and communicate with stakeholders to ensure neutrality.

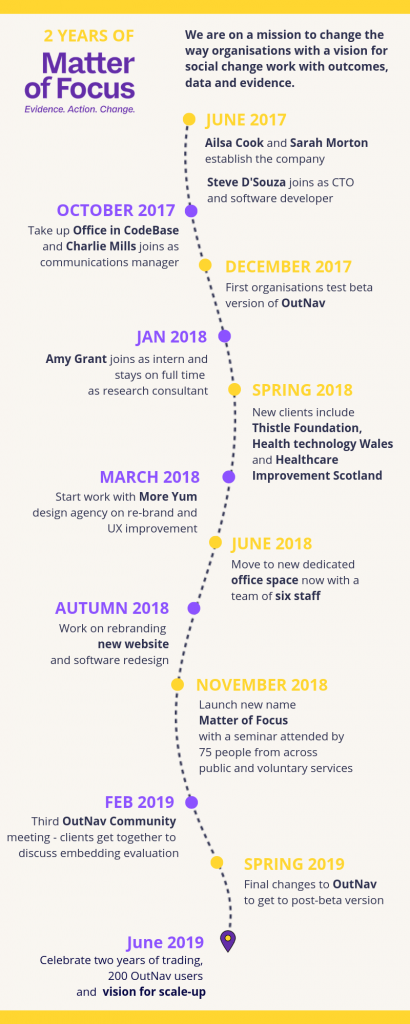

Following our approach, we used the Citadel stakeholder pathway in their outcome map to shape the survey questions.

We drafted survey questions that reflected the desired outcomes across the outcome map. Initially, questions focused on the ‘what we do’ column – asking stakeholders to comment on Citadel Families Project activities.

The survey then included reflections across the outcome map – looking at engagement, learning and capacity building, behaviour change and higher-level outcomes. We included some questions about the risks and assumptions associated with the outcome map – particularly around working with vulnerable children.

It was possible to ask these stakeholders questions relating directly to outcomes, as they are other professionals who have contact with the same families. They have a degree of professional judgement that can be useful to call on in this kind of evaluation.

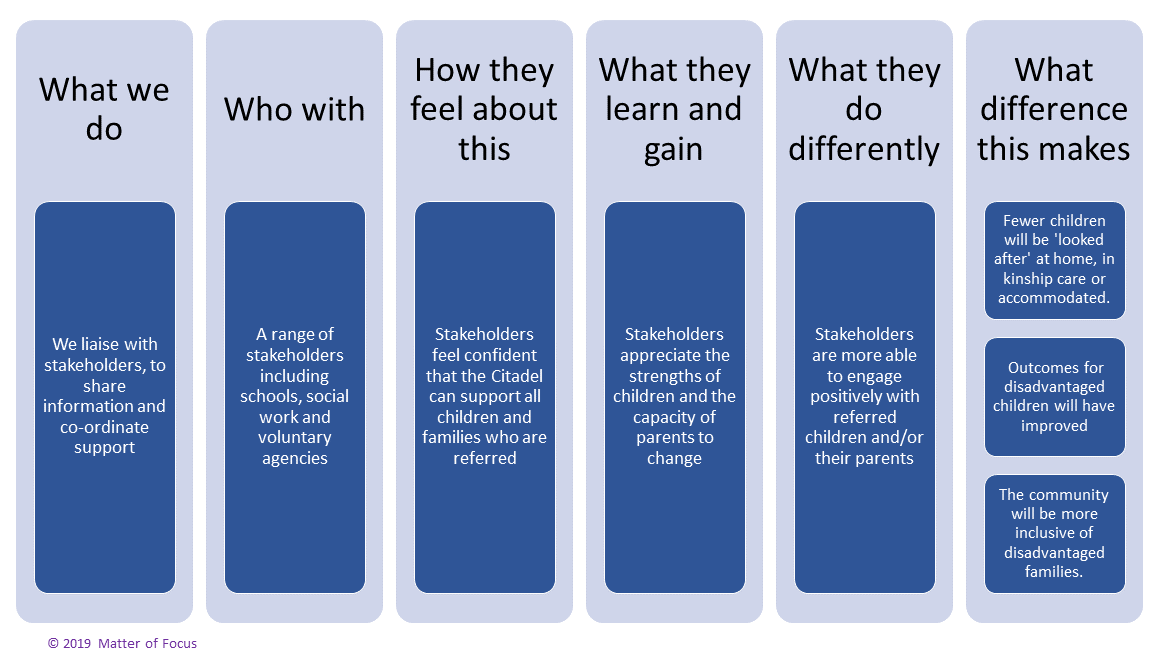

The survey asked whether stakeholders agreed that:

All but one of the stakeholders who responded felt able to comment on all these outcomes.

Only one of the high-level outcomes was included in a direct question, which was whether stakeholders thought that fewer children will be ‘looked after’ at home, in kinship care or accommodated.

The majority of stakeholders who took part in the survey either strongly agreed (59%) or agreed (30%) that children who attend Citadel are at less risk due to Citadel’s involvement. Two respondents neither agreed nor disagreed as shown below:

To further understand how Citadel has improved outcomes for children, we asked stakeholders what was gained overall by working with Citadel. Respondents stated:

“[Citadel] really enhances the support we can give to our children, building their confidence, resilience and improving their relationships with others.”

“Citadel are a valuable support to families and other involved organisations. The staff are very skilled at engaging children and their families and supporting them towards change.”

“Children have more self-esteem and confidence. The work from Citadel is invaluable and the support is hugely appreciated.”

Citadel staff are pleased to have gathered these views as further evidence of the effectiveness of their programme.

“These results look great. And I feel they have a validity to them coming from an external evaluator. We will be able to incorporate the results in our final project evaluation and refer to them in future funding applications. So, thanks to Matter of Focus for doing this.”

Andy Thomas, Citadel Families Project Manager

You can read more about the Citadel Families Project here.

If you have a well-defined outcome map with risks and assumptions, it can be quite simple to create a survey, interview or focus-group questions from it:
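As a rough illustration of that idea (a sketch only, not how OutNav or Matter of Focus build surveys), the snippet below turns each item in an outcome map into an agree/disagree statement and each assumption into a simple check question. The function name `draft_survey`, the question wording and the example map items are all hypothetical.

```python
# Response scale for outcome statements (an assumed five-point scale).
LIKERT = [
    "Strongly agree",
    "Agree",
    "Neither agree nor disagree",
    "Disagree",
    "Strongly disagree",
]


def draft_survey(outcome_map, assumptions=()):
    """Draft one question per outcome-map item, then one per assumption.

    outcome_map: dict mapping a heading to a list of outcome statements.
    assumptions: iterable of risk/assumption statements from the map.
    """
    questions = []
    for heading, items in outcome_map.items():
        for item in items:
            questions.append({
                "heading": heading,
                "prompt": f"How far do you agree with this statement? '{item}'",
                "options": LIKERT,
            })
    for assumption in assumptions:
        questions.append({
            "heading": "Risks and assumptions",
            "prompt": f"In your experience, does this hold? '{assumption}'",
            "options": ["Yes", "No", "Not sure"],
        })
    return questions


# Hypothetical example content, for illustration only.
example_map = {
    "How they feel": ["Families feel welcomed by the project"],
    "What they learn and gain": ["Children gain confidence"],
}
example_assumptions = ["Families are able to attend regularly"]

for question in draft_survey(example_map, example_assumptions):
    print(f"[{question['heading']}] {question['prompt']}")
```

Drafted questions like these would still need editing by someone who knows the stakeholders, for instance to decide which outcomes respondents can reasonably judge.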

If you are a current OutNav client, we can offer support with carrying out interviews, surveys, focus groups or other data collection. Please contact us to find out about these services and related costs.

I was admiring the many interesting and nicely designed books on one of my ex-colleague’s shelves in her university office. So many great ideas, well thought through, beautifully written, hours and hours of highly intelligent people’s intellectual effort. Great topics too – she is a social scientist, so books on inequality, policy making and poverty feature on the shelf. And yet…

One of the reasons I left the university to work much more closely with public sector and voluntary sector colleagues, who are grappling with the difficult job of trying to solve some of the hardest social problems, is that I really care about making a difference.

That might seem a perverse statement, given that universities are in the public sector, and my company in the private. But somehow, all that effort to generate new ideas and publish them can seem a long way away from solving real problems.

It’s difficult to make an impact through the traditional and still dominant academic route of publish, share and influence, especially when the influencing work is generally unrecognised, poorly supported, and unrewarded in the university sector.

I was part of the lunatic fringe at the university: I didn’t really fit the academic mould, with one foot always firmly in the real world.

And although I got a PhD and published papers, some of which have been widely used, the rewards were always stacked on traditional scales. Lots of people really liked my ideas about increasing the impact of university research, but often they liked them more as ideas – there was never the real focus on developing the support, processes and systems to bring this to life across the institution.

So I took the leap with a former university colleague, Ailsa Cook, to take ideas and firm them up into something practical and useable in the public and voluntary sectors.

Reactions from university colleagues were mixed; some could really see why I wanted to go – I had always been innovative and trying to push ideas forward, but many thought I was crazy to leave behind security to risk it all on my ideas…

It has been exciting to get alongside some amazing clients, and to bring some robust thinking and well-worked methods to life in a different sector.

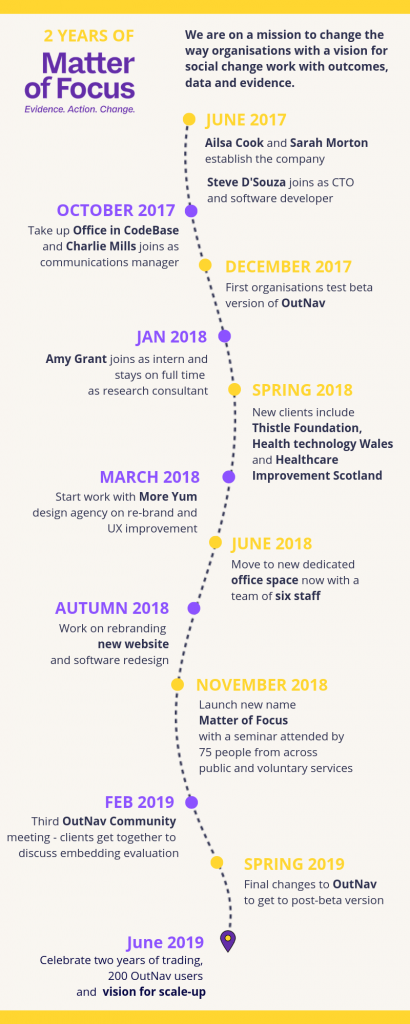

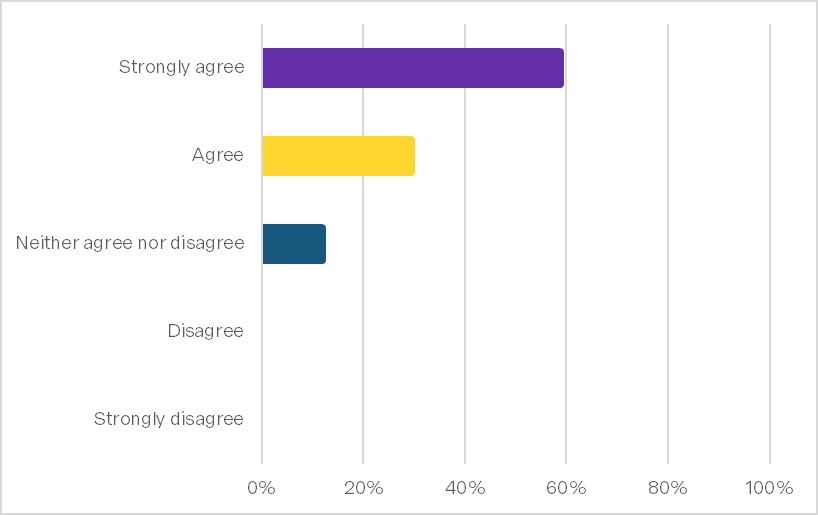

We have survived and thrived through our first year: we have a product, a website, paying customers and we’re now a core team of six.

It’s an amazing contrast to be in the fast-paced tech start-up sector, bringing creativity and innovation to a new process, thinking on my feet, learning fast and trying new things. The space for innovation is wide open, and there is no bureaucracy dragging us down. We have so much more control now; we can make decisions quickly and choose our own level of risk.

We are building a company culture that respects people and tries to bring out the best in them, but also recognises that they have a life outside work.

It has been especially important to us, as two female company founders, to create a family-friendly and diverse working environment.

We have also joined a growing band of mission-led companies, who care about making a difference, not just making a profit. So in some ways it feels like a great time to be bringing values-based ways of working into the private sector.

There are some things to miss of course; some lovely colleagues, amazing people doing their best to bring their knowledge to the next generation, and to build on and develop exciting ideas. Yes, there was a more regular pay cheque, a reasonable pension contribution and some financial security – although that was always tied to bringing in the next grant, competing with others to stand out as the most successful, and struggling to keep junior colleagues in work as they built their careers.

As I cycle back from the meeting with my university colleague to our lovely office with our small dedicated staff team, something she said sticks in my mind…

She was reflecting on how much she has got tied up in some of the bureaucracy of the department she works in:

“It must be really good to feel you are doing something worthwhile”.

“Yes”, I replied, “yes it is”.

In leading the study, I spent a couple of days with the Global Kids Online (GKO) team in London to map out how their work helps improve children’s experiences online, facilitating the team to express what outcomes matter to the programme.

It was a dreary, rainy and dark couple of November days in London when we met. However, the discussion was lively and we made great progress in setting out how the programme has been influencing countries around the world – working to understand and improve children’s experiences, positive and negative, of being online.

As usual for Matter of Focus, we started our work on this project with strategic outcome mapping. This is a participatory process of setting out how the project activities seek to contribute to outcomes using our headings.

Outcome mapping works best when a team can get together and spend time really thinking about and discussing how their work contributes to outcomes.

For this project we set out three main pathways where the project makes a contribution:

The Matter of Focus team, assisted by Alexandra Ipince from UNICEF Innocenti, will collate existing evidence and feedback against these pathways, and interview key stakeholders to capture more information on the impacts of the project over the last three years.

We have a small team working on this impact study, including associated researchers Helen Berry and Christina McMellon. We are looking forward to using our cloud-based software OutNav, to hold the project outcome maps and help us conduct collaborative analysis to unpick and understand the impact of the work.

We will be posting more here as the project unfolds, and on Twitter @Matter_of_Focus.

Read the Global Kids Online post about this impact study on their website.

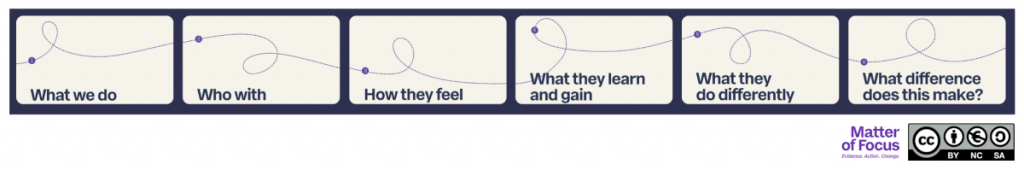

Over the last few years, we have refined an approach to understanding change into a simple framework that can be used by organisations that face these challenges.

This is a core element of our approach to supporting organisations and the basis of our software OutNav.

Our approach helps you to map your initiative’s activities through different levels of outcomes (or impacts) to understand how you make a difference. This creates a framework for communication, learning, evaluation and reporting.

We use plain language headings to help teams distil and interrogate the changes they seek to make with their public service initiative (this could be a project, programme, organisation or multi-organisation partnership).

This is a theory of change approach, which aims to uncover how these kinds of people-influencing projects really make a difference.

Unlike other similar approaches, our approach builds on contribution analysis, which puts people at the centre of change. That’s why we include a level of outcomes heading ‘How they feel’ – it ensures that the reactions of intended recipients of a programme, policy or intervention are included in understanding why and how change might happen. If people react positively to engagement then learning, behaviour change and wider benefits can flow from the programme. If not, then none of the intended changes can happen.

Using our approach can be a simple or complex process depending on the size, scope and ambition of your work.

Some more simple uses of the headings include, to:

When we work with an organisation, we bring people together to collaborate on creating a map of activities to outcomes using our headings; we call this an outcome or impact map. If their work is complex, multi-partner, organisation wide, or more experimental or innovative, they often need a lot of support to create an outcome map that will work for their organisation, communicate their work well, and form the basis for learning, evaluation and reporting. We have refined our expertise over the last few years and have developed a way of facilitating this process effectively for organisations.

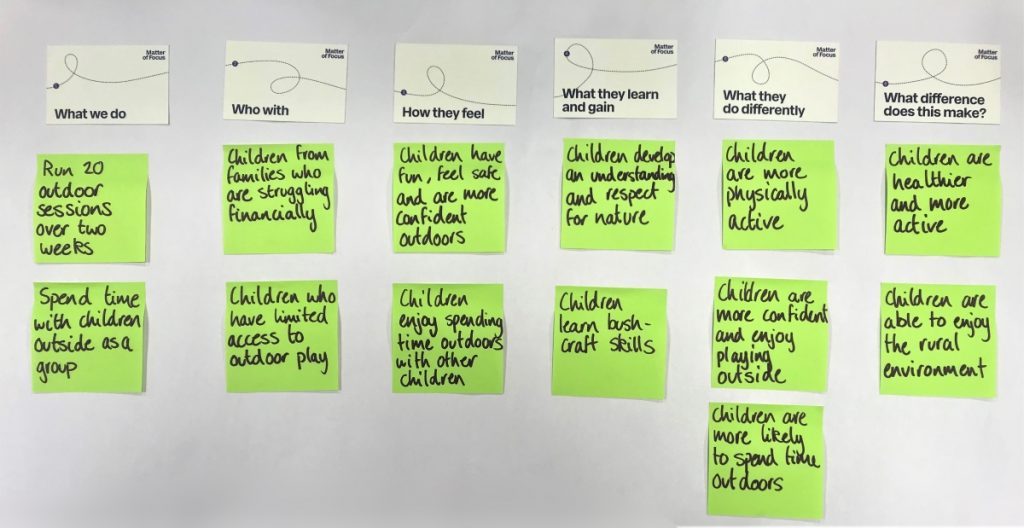

Try outcome mapping for one of the simple examples described above. You will need a set of our headings and some post-it notes or cards.

Download a copy of our headings.

First, lay out your headings in order (1 to 6). Then, using the following prompts as guidance, create lists under each heading. It is important to use a separate post-it note or card for each item you write so that you can easily refine, replace and move them around.

What we do: Write down the key activities your project delivers.

Who with: Write down everyone who engages with these activities. This could include service users, staff and funders, for example.

How they feel: Write down the expected positive reactions people will have if they engage with the activities offered.

What they learn and gain: Write down the changes to their knowledge, skills and attitudes as a result of positive engagement with your activities.

What they do differently: Write down any positive changes to their behaviour and practice based on these changes in knowledge, skills and attitudes.

What difference does this make? Articulate your project’s final outcomes – what difference does it make if people change their behaviour in the ways you describe? This is where higher level outcomes like people being healthier, happier or better off can be included.
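For readers who prefer to keep their cards digitally, the six headings can be pictured as a very small data structure. This is a minimal sketch under our own assumptions, not the OutNav data model: the class name `OutcomeMap`, its methods and the example cards are hypothetical.

```python
from dataclasses import dataclass, field

# The six plain-language headings, in the order given above.
HEADINGS = [
    "What we do",
    "Who with",
    "How they feel",
    "What they learn and gain",
    "What they do differently",
    "What difference does this make?",
]


@dataclass
class OutcomeMap:
    """Hypothetical container holding one list of cards per heading."""

    name: str
    cards: dict = field(default_factory=lambda: {h: [] for h in HEADINGS})

    def add(self, heading: str, text: str) -> None:
        # Reject anything that is not one of the six headings.
        if heading not in self.cards:
            raise KeyError(f"Unknown heading: {heading}")
        self.cards[heading].append(text)

    def empty_headings(self) -> list:
        # Headings that still have no cards, in map order.
        return [h for h in HEADINGS if not self.cards[h]]


# Illustrative content only.
example = OutcomeMap("Example youth project")
example.add("What we do", "Run weekly drop-in sessions")
example.add("Who with", "Young people aged 12 to 16")
print(example.empty_headings())
```

Keeping one entry per card mirrors the advice to use a separate post-it note for each item, so individual items can be refined, replaced or moved.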

This approach is suitable for a range of purposes in outcome evaluation. Here are some examples to get you thinking about when you could use your outcome map:

Use our approach to set out how you think the intervention will work. Here you would use the headings as a framework for plotting out your intervention when you are planning it or writing a funding application.

Using the headings as a framework, you can create an outcome map that can be shared with stakeholders, service users and funders that clearly communicates how you make a difference.

Your outcome map can also be used to monitor what you’re doing, through reflection or feedback, learn from it and adapt and improve your project or intervention as you deliver it.

The outcome map can be used as a framework for evaluation. You can gather data against each item in your map and analyse it to report on your project’s achievements or to pass on to an external evaluator to scrutinise.

An outcome map really helps to create outcome-focused reports that demonstrate how you make a difference.

Co-authored by Matter of Focus Directors Sarah Morton and Ailsa Cook, this practical handbook sets out our approach and contains a host of ideas, principles and tools to help you understand and track the difference your initiative makes. Find out more about the book.

This post was originally published on Nov 28, 2017 and has been updated to reflect our progress since then!

I have always been interested in innovation – trying new approaches to solving problems, new ideas, new ways of thinking that result in better services, processes and policies for people and communities. I particularly enjoy bringing together different approaches from distinct sectors and watching the sparks fly as they interact and create new ideas. This has happened throughout my career: thinking about adult education and the arts for my MSc; bringing together the children’s sector with HIV organisations in a national development role; outreach into mental health services; and more recently, bringing real world experience and ideas into research to create more dynamic, outward facing and change-making research-to-action

Meeting Ailsa and building our company Matter of Focus has been charged with that same kind of energy and dynamism. Like me, Ailsa had kept one foot in academia and one firmly planted in the real world. Through her work on dementia, person-centred services and on how practitioners use evidence to create change, she had built wonderful expertise in understanding the potential of developing evidence that can be practical and capable of supporting real world change. When we met we instantly recognised a synergy in our approaches and values.

Bringing together our experiences has given us a clear focus on some unmet need in public services that we want to address. The three questions that many of our public service collaborators and clients struggle with are:

Over the past four years Ailsa and I have worked together on a number of different projects to develop new ways of helping organisations address these issues. We are on a mission to improve public services. The result is an approach that organisations are now using to develop, understand, map and evidence their pathways to outcomes. The approach is based on Contribution Analysis (a theory-based approach to evaluation) and strongly influenced by participatory and action research approaches. Organisations that make a difference in the world through working with people are particularly enthusiastic about our approach as it gives them tools to understand and drive change.

We have spent the last 18 months developing our innovative software OutNav to support our approach. The software:

Like many organisations, you most likely have some outcomes that are important to you expressed in your mission, programme plan, or agreed with funders. But do you know how your activities contribute to achieving these outcomes? This requires serious thinking about how your activities engage with the people and communities you care about, how they react, and what learning and capacity building is needed for change to happen. Your staff teams, service-user representatives, funders and stakeholders all need to agree on key activities, and pathways to key outcomes.

We work with organisations to map their outcomes to their activities. Often there is agreement and pathways are clear. This is especially so for new projects or programmes, or ones where work has been done to express clearly a theory of change. However, where an organisation has been working for many years for multiple funders, or where there is greater diversity in staff and stakeholders, or where there is less clarity about key organisational activities, then it can take some time for everyone to agree and be happy with a map of these outcomes, and with how their work fits.

In some cases outcome mapping may throw up issues about whole areas of activity and whether or not these fit with the organisation’s mission.

Having a clear picture of how activities map to outcomes is the framework we use as the basis for assessment, so for many organisations discussion is needed to get to a point where there is enough clarity to move on to thinking about how progress will be tracked.

Once clear pathways from activities to outcomes have been mapped, it’s time to take a look at what data you’re collecting and how this fits with your outcomes framework. In our experience this always throws up a lot of issues. Ask yourself this:

If you answered yes to one or more of the above – you are not alone. We commonly find these issues, and we support organisations to develop a plan for resolving them that creates the grounds for a robust outcomes assessment process.

Our approach is pragmatic – there is no point in searching for perfect outcome measures. Instead we look at creating robust programme logic backed up by a mix of routinely collected and research-based measurement alongside specially designed data collection that together can tell a strong story of impact.

Having enough capacity to carry out outcomes assessment is an issue for many organisations. Some have internal research or analytical capacity that can support the process, but for many it is a case of trying to identify that capacity within their teams.

The practicalities of delivering programmes often absorb most of an organisation’s capacity, leaving little time for outcome assessment. It can also be challenging to find the time and space to think about evidence and how to analyse it to understand progress in busy delivery-focused organisations. We find these pressures ease when organisations prioritise outcome assessment, recognising its potential for learning, improvement and effectiveness, as well as for reporting to funders and attracting funding. It can also be helpful to start small, building capacity and applying learning from one part of the organisation to another.

We established Matter of Focus because we wanted to support organisations through these challenges. We work with organisations to map outcomes, help with data auditing and design new feedback collection mechanisms, and our software OutNav helps structure and store relevant data and gives a framework for analysis.

The role of a knowledge mobiliser is to help others use different kinds of evidence to drive change. In March I introduced our company to the UK Knowledge Mobilisation Forum (UKKMbF) by taking a market stall at their 2018 gathering. I had attended in the past as an academic, but this was my first time at the UKKMbF with my startup company director hat on, which brought a fresh perspective.

What struck me at the 2018 meeting was how much the conversation had changed from the previous three Forums. What is it that is different about how we think about and work on getting evidence into action?

It’s hard to convey the enthusiasm, excitement and sense of connectedness that was apparent across the two days of the meeting. Almost every coffee queue, table, breakout and session led to new connections and excited chatter. We definitely felt like we had found our tribe and I think the balanced mix of university-based researchers and staff, consultants, and various health, voluntary and other organisations really contributed to that. Somehow a more cohesive sense of being a knowledge broker and knowing what that was and how that related to others from diverse sectors was much more apparent than in previous meetings.

Running alongside the sense of connection, I thought, was a less pessimistic mindset about the challenges of getting evidence into action and more willingness to accept and work with the complexity that driving change always implies. This was particularly exemplified by the nodding heads to Dez Holmes’s presentation of the challenges her organisation faces working with practitioners to use evidence to improve practice. The need to consider leadership, culture, individual preferences and values really resonated with me and our approach. Whilst Dez’s message was that knowledge mobilisation is hard, people seemed encouraged and enthused rather than put off.

Dez also emphasised that evidence use is based on relationships – one of my favourite themes! But she wasn’t the only one. Throughout the Forum there was a real emphasis on relationship building being core to knowledge mobilisation. This included an emphasis on skills and approaches that are often considered softer (but also better for working with complex systems): listening, flexibility, trust-building, a mix of data and evidence of different kinds, creativity and qualitative reflections. There was a focus on processes rather than on types of evidence. This relational emphasis seemed to be accepted and assumed, and so the conversation was about how to share, reflect and develop these approaches to improve knowledge mobilisation practice.

Finally, the innovative engagement approaches embedded in this forum helped to reflect the creativity and innovation that many people attending are driving in their organisations and settings. The use of open space, social media, creative approaches and small groups really helped to create a collegiate atmosphere, and many more opportunities for connection and sharing than traditional conference formats. Well done to the conference organising team – you were amazing.

I think there are three things that strike me overall.

Through our work with public service organisations we have identified seven steps that organisations need to take to understand how successful a project or programme is. These steps are also important to help the processes of planning, learning, reflecting and monitoring. They can also provide a foundation for ‘telling the story’ of how change happens, to funders, service users or other stakeholders.

Understanding what kinds of outcomes you are seeking and why is essential. This means interrogating and thinking through the outcomes that are important to your programme or organisation and why. What are the outcomes for? How do they help focus the work of the organisation or programme? (Take a look at our earlier post Why focus on outcomes?).

Next you need to understand how the activities your organisation or programme offers lead to these outcomes. This is key to achieving them and judging their success. We have built on work by Steve Montague¹ to understand the change process. Our approach to mapping a contribution to outcomes means understanding how the people who have engaged with a programme or organisation feel, what they learn and gain, and how this leads to changes in behaviour and practice, or increased capacities. This approach builds a solid basis from which to understand and judge improvement in outcomes for people and communities.

Learning, improvement and innovation are all important in public service delivery and development. It’s important to build on what is known to be successful. That knowledge could come from existing research, from evidence developed through practice, or by looking at what other similar organisations or programmes have done in the past. Both ‘what works’ type evidence and thematic reviews can be really helpful for this (for example the Centre for Research on Families and Relationships’ About Families project). This knowledge can help refine the thinking behind the overall approach, and adapt it if necessary.

Are you drowning in data and evidence collected through routine activities? Many organisations are: data about service users and referrers, exit data, or evaluation data. Bringing this together to tell the story of how your organisation or programme makes a difference for people and communities can be challenging. Assembling existing evidence against an outcome map is a good basis for building this story.
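To make the idea of assembling evidence against an outcome map a little more concrete, here is a minimal sketch. It assumes each piece of evidence has been tagged by hand with the map item it speaks to; the function names `assemble_evidence` and `evidence_gaps`, and all of the example items and sources, are hypothetical.

```python
from collections import defaultdict


def assemble_evidence(map_items, evidence):
    """Group evidence sources under the outcome-map item they speak to.

    map_items: list of outcome-map items, in map order.
    evidence: iterable of (source, item) pairs tagged by the team.
    """
    by_item = defaultdict(list)
    for source, item in evidence:
        if item in map_items:
            by_item[item].append(source)
    # Keep map order and include items that have no evidence at all.
    return {item: by_item[item] for item in map_items}


def evidence_gaps(assembled):
    """Return the map items that currently have no evidence against them."""
    return [item for item, sources in assembled.items() if not sources]


# Illustrative content only.
items = [
    "People feel listened to",
    "People gain new skills",
    "People use services differently",
]
tagged = [
    ("Exit questionnaire", "People feel listened to"),
    ("Referral records", "People use services differently"),
    ("Staff reflections", "People feel listened to"),
]

assembled = assemble_evidence(items, tagged)
for item, sources in assembled.items():
    print(item, "<-", sources)
print("No evidence yet:", evidence_gaps(assembled))
```

A count of sources says nothing about their quality, so a listing like this is only a starting point for discussion about what the evidence can and cannot show.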

It’s often the case that existing evidence can say something about how many people have used a service, but less about what difference it has made for those people’s lives, or how change has happened. Four questions are important here:

Once you understand the gaps in evidence, address them to create a more complete and robust picture of how change happens. At Outcome Focus we believe that any new data collection approaches should reflect the perspective of the people who have the most insight into how it works. That would include service-user feedback that is collected and used in a way that’s appropriate and proportionate to the people and programme/organisation in question. But importantly it would also include thinking about how to formalise the insights of practitioners who deliver the service. One of the tools we have developed is a reflective impact log that captures practitioner thinking, alongside a group process to refine that evidence and use it more formally.

In a time of shrinking budgets and entrenched inequalities, the tide seems to be flowing away from external, standalone evaluations that are often quickly forgotten about and left on the shelf. Instead, organisations and programmes are becoming more adept at self-reflection, and attuned to learning as they develop to enhance the chance of success. This needs to be supported by organisational structures that enable evidence gathering, reflection and discussion, and processes to ensure everyone has opportunities and clear criteria on which to reflect and judge progress. These processes of internal evidence-use can help to ensure that working in an outcomes-focused way is embedded into organisational culture.

At Matter of Focus we offer strategic support to understand and map outcomes, alongside our cloud-based software OutNav, which can help organisations embed this way of working in their culture so that they can plan, learn, reflect and report on their outcome-based work.

¹ Steve Montague (2008) Results logic for complex systems. Performance Management Network Inc.